You are reading "The Zuulcat Archives". These are publications written by Martin Mazur between 2006 and 2015.

Future writings will be published on mazur.today.

The Zuulcat Archives

Estimating in Trees, Probing the Unknown

Feb 13, 2009 | Filed under: Software Development | Tags: Agile, Estimation, Planning

One of the biggest problems for me, ever since I started doing professional software development, has been estimation.

Back in the dark ages we used to get a huge spec and spend a couple of weeks going through it, estimating every feature in hours. We did this because the business people SAID they wanted to know the hours.

This obviously didn't work, since we always got it wrong. When I started thinking agile I learnt the trick of relative estimates, and this revolutionized everything. The problem was that while we changed, the business stayed the same. To tell someone how long something would take, we still needed an initial backlog. Specifying a system usually takes some time, even in stories, and honestly, doesn't this feel a lot like big spec upfront, revamped?

I figured there had to be a better way. All we really want is a rough estimate, somewhere between here and there, nothing too detailed: an educated guess.

At Öredev 2007 someone (I can't remember who) told me about estimating in trees. This is basically the idea of relative estimates applied iteratively, over and over again. When estimating in trees we work with different levels of granularity and drill down at certain spots to probe how big something might be.

What we try to do is split the problem into smaller and smaller chunks and weigh them relative to each other until we find ourselves at a comfortable level of granularity.
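One way to picture this is as a tree where each level is estimated in its own relative unit and only one reference item per level is broken down further. The sketch below is a minimal Python illustration under that assumption; the `Node` class and `estimate_in_finest_units` function are hypothetical names, and the numbers in the example are made up.

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class Node:
    """One item in the estimation tree (hypothetical structure for illustration)."""
    name: str
    points: float  # relative estimate, in the unit of this node's level (assumed > 0)
    children: List["Node"] = field(default_factory=list)


def reference_child(node: Node) -> Optional[Node]:
    """Return the child that was broken down further (the reference item), if any."""
    for child in node.children:
        if child.children:
            return child
    return None


def estimate_in_finest_units(node: Node) -> float:
    """Size of `node`'s children, expressed in the unit of the deepest level probed."""
    total = sum(child.points for child in node.children)
    ref = reference_child(node)
    if ref is None:
        return total  # nothing drilled down here: already in the finest unit
    # Finer units per point at this level, measured on the reference item only.
    per_point = estimate_in_finest_units(ref) / ref.points
    return total * per_point


# Hypothetical tree: three items, and only the 2-point reference item "B" is broken down.
app = Node("App", 1, [  # the root's own points are not used
    Node("A", 4),
    Node("B", 2, [Node("b1", 3), Node("b2", 5), Node("b3", 2)]),
    Node("C", 6),
])
print(estimate_in_finest_units(app))  # 12 points * (10 / 2) = 60.0
```

The design choice here is that the conversion factor between two levels comes from the single reference item, which is what lets the rest of the tree stay coarse.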

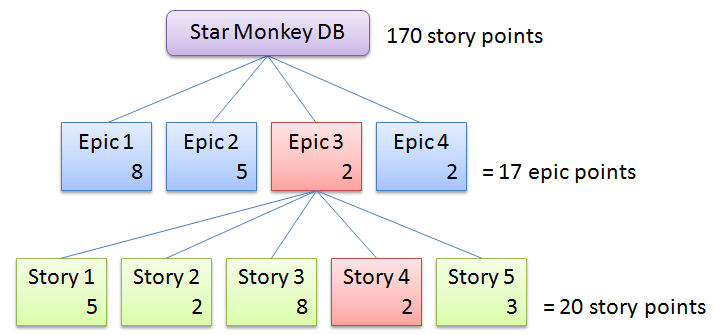

Let's demonstrate. Assume we are building an application called "Star Monkey DB". Our first level might be the application itself.

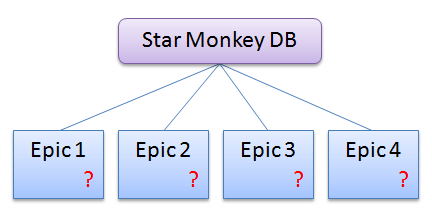

For the next level I like to use epics, but you could also use wireframes or use cases. Anything that is smaller than the previous level.

Now we estimate! Of course we do this using relative estimates, preferably with the whole team present. Pick a reference item (here Epic 3), set it to two epic points, and estimate the rest relative to that epic using your favorite estimation technique.

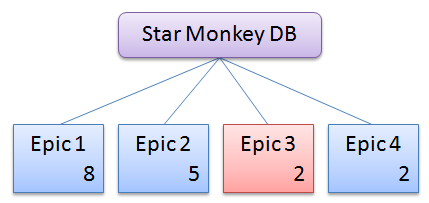

Now take your reference item and break it down to stories.

Estimate again! Pick a reference story, set it to two story points, and estimate all the other stories relative to that one.

Now we know that 2 epic points are 20 story points (the stories in Epic 3 add up to 20), so one epic point is roughly 10 story points. With the epics in our example adding up to 17 epic points, Star Monkey DB would take roughly 170 story points to build. If your team has an established velocity, you're done. If not, you'll have to estimate a velocity to find out how many iterations you think you need.
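The arithmetic in this step is small enough to write out. The sketch below uses the numbers above (a 2-point reference epic containing 20 story points, and epics summing to 17 epic points); the velocity of 20 story points per iteration is a made-up figure, and the function names are hypothetical.

```python
import math


def story_points_per_epic_point(ref_epic_points: float, ref_story_points: float) -> float:
    """Conversion factor measured on the reference epic."""
    return ref_story_points / ref_epic_points


def iterations_needed(total_story_points: float, velocity: float) -> int:
    """Whole iterations needed at a given velocity (story points per iteration)."""
    return math.ceil(total_story_points / velocity)


factor = story_points_per_epic_point(ref_epic_points=2, ref_story_points=20)  # 10.0
total_epic_points = 17                          # sum of all epic estimates in the example
total_story_points = total_epic_points * factor  # 170.0
velocity = 20                                   # hypothetical velocity
print(total_story_points, iterations_needed(total_story_points, velocity))  # 170.0 9
```

Rounding up with `math.ceil` reflects that a partially used iteration still has to be planned as a whole one.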

This works well when estimating something that does not have a complete backlog. The results can be used to discuss the cost and time frame of a project without the much-dreaded "pre-study phase".

This technique has worked well for me and given surprisingly good results. Keep in mind, though, that this is a rough estimate, and you might want to do some sort of worst case/best case analysis before handing the results over to any business people.
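The post doesn't prescribe how to do the worst case/best case. One simple option, shown here purely as an assumption, is to apply an optimistic and a pessimistic velocity to the same story-point total. The function name and both velocities below are hypothetical.

```python
import math
from typing import Tuple


def iteration_range(total_story_points: float,
                    best_velocity: float,
                    worst_velocity: float) -> Tuple[int, int]:
    """Return (best case, worst case) iteration counts for a story-point total."""
    if not 0 < worst_velocity <= best_velocity:
        raise ValueError("expected 0 < worst_velocity <= best_velocity")
    best = math.ceil(total_story_points / best_velocity)
    worst = math.ceil(total_story_points / worst_velocity)
    return best, worst


print(iteration_range(170, best_velocity=25, worst_velocity=15))  # (7, 12)
```

Presenting a range like this makes it explicit that the number is an educated guess rather than a commitment.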